Burst Function Pointers vs. Jobs

Function pointers aren’t the only way to express a unit of work. Unity’s own job system does just this, albeit in a different way. Today we’ll compare the performance of Burst’s function pointers against jobs themselves!

A recent comment by Neil Henning of Unity points us to the Burst 1.3 documentation. While Burst 1.3 is still in preview, the documentation contains helpful hints on the appropriate and inappropriate usage of function pointers. After showing some examples, it makes a performance claim:

> The above will run 1.26x faster than the batched function pointer example, and 1.93x faster than the non-batched function pointer examples above.

To confirm, I ported these examples over to a testing script. The only changes were to give them unique names and to fix a couple of typos.

On top of this, I added some performance-testing code. I used 10,000-element arrays (the `len` constant in the script) and a batch count of 64. I then averaged the run times of each job over 100 frames.

Here’s the resulting script:

```csharp
using System.Diagnostics;
using UnityEngine;
using Unity.Burst;
using Unity.Collections;
using Unity.Collections.LowLevel.Unsafe;
using Unity.Jobs;
using Unity.Mathematics;

// Baseline: a plain parallel job with no function pointer
[BurstCompile(CompileSynchronously = true)]
struct PlainJob : IJobParallelFor
{
    [ReadOnly] public NativeArray<float> Input;
    [WriteOnly] public NativeArray<float> Output;

    public void Execute(int index)
    {
        Output[index] = math.sqrt(Input[index]);
    }
}

// Function pointer that processes a whole batch of elements per call
[BurstCompile(CompileSynchronously = true)]
public class BatchFunctionPointers
{
    public unsafe delegate void MyFunctionPointerDelegate(int count, float* input, float* output);

    [BurstCompile(CompileSynchronously = true)]
    public static unsafe void MyFunctionPointer(int count, float* input, float* output)
    {
        for (int i = 0; i < count; i++)
        {
            output[i] = math.sqrt(input[i]);
        }
    }
}

// Calls the batch function pointer once per batch
[BurstCompile(CompileSynchronously = true)]
struct BatchJob : IJobParallelForBatch
{
    public FunctionPointer<BatchFunctionPointers.MyFunctionPointerDelegate> FunctionPointer;
    [ReadOnly] public NativeArray<float> Input;
    [WriteOnly] public NativeArray<float> Output;

    public unsafe void Execute(int index, int count)
    {
        var inputPtr = (float*)Input.GetUnsafeReadOnlyPtr() + index;
        var outputPtr = (float*)Output.GetUnsafePtr() + index;
        FunctionPointer.Invoke(count, inputPtr, outputPtr);
    }
}

// Function pointer that processes a single element per call
[BurstCompile(CompileSynchronously = true)]
public class SingleFunctionPointers
{
    public unsafe delegate void MyFunctionPointerDelegate(float* input, float* output);

    [BurstCompile(CompileSynchronously = true)]
    public static unsafe void MyFunctionPointer(float* input, float* output)
    {
        *output = math.sqrt(*input);
    }
}

// Calls the single-element function pointer once per element
[BurstCompile(CompileSynchronously = true)]
struct SingleJob : IJobParallelFor
{
    public FunctionPointer<SingleFunctionPointers.MyFunctionPointerDelegate> FunctionPointer;
    [ReadOnly] public NativeArray<float> Input;
    [WriteOnly] public NativeArray<float> Output;

    public unsafe void Execute(int index)
    {
        var inputPtr = (float*)Input.GetUnsafeReadOnlyPtr();
        var outputPtr = (float*)Output.GetUnsafePtr();
        FunctionPointer.Invoke(inputPtr + index, outputPtr + index);
    }
}

class TestScript : MonoBehaviour
{
    private const int len = 10000;
    private const int batchCount = 64;

    private NativeArray<float> m_Input;
    private NativeArray<float> m_Output;
    private FunctionPointer<BatchFunctionPointers.MyFunctionPointerDelegate> m_BatchFp;
    private FunctionPointer<SingleFunctionPointers.MyFunctionPointerDelegate> m_SingleFp;
    private PlainJob m_PlainJob;
    private BatchJob m_BatchJob;
    private SingleJob m_SingleJob;
    private Stopwatch m_Stopwatch;
    private long plainTicks;
    private long batchTicks;
    private long singleTicks;
    private long numFrames;

    unsafe void Start()
    {
        m_Input = new NativeArray<float>(len, Allocator.Persistent);
        m_Output = new NativeArray<float>(len, Allocator.Persistent);

        m_BatchFp = BurstCompiler.CompileFunctionPointer<
            BatchFunctionPointers.MyFunctionPointerDelegate>(
            BatchFunctionPointers.MyFunctionPointer);
        m_SingleFp = BurstCompiler.CompileFunctionPointer<
            SingleFunctionPointers.MyFunctionPointerDelegate>(
            SingleFunctionPointers.MyFunctionPointer);

        m_PlainJob = new PlainJob
        {
            Input = m_Input,
            Output = m_Output
        };
        m_BatchJob = new BatchJob
        {
            FunctionPointer = m_BatchFp,
            Input = m_Input,
            Output = m_Output
        };
        m_SingleJob = new SingleJob
        {
            FunctionPointer = m_SingleFp,
            Input = m_Input,
            Output = m_Output
        };

        // Warmup
        m_PlainJob.Schedule(len, batchCount).Complete();
        m_BatchJob.ScheduleBatch(len, batchCount).Complete();
        m_SingleJob.Schedule(len, batchCount).Complete();

        m_Stopwatch = Stopwatch.StartNew();
    }

    void Update()
    {
        // Time each job separately and accumulate the elapsed ticks
        m_Stopwatch.Restart();
        m_PlainJob.Schedule(len, batchCount).Complete();
        plainTicks += m_Stopwatch.ElapsedTicks;

        m_Stopwatch.Restart();
        m_BatchJob.ScheduleBatch(len, batchCount).Complete();
        batchTicks += m_Stopwatch.ElapsedTicks;

        m_Stopwatch.Restart();
        m_SingleJob.Schedule(len, batchCount).Complete();
        singleTicks += m_Stopwatch.ElapsedTicks;

        // Stop measuring after 100 frames; disabling triggers OnDisable
        numFrames++;
        if (numFrames == 100)
        {
            enabled = false;
        }
    }

    void OnDisable()
    {
        m_Input.Dispose();
        m_Output.Dispose();

        // Report the average ticks per frame as CSV
        print(
            "Job,Ticks\n" +
            $"Plain,{plainTicks/numFrames}\n" +
            $"Batch,{batchTicks/numFrames}\n" +
            $"Single,{singleTicks/numFrames}\n");
    }
}
```

I ran the test in this environment:

- 2.7 GHz Intel Core i7-6820HQ

- macOS 10.15.3

- Unity 2019.3.5f1

- Burst package 1.2.3

- Jobs package 0.2.7-preview.11

- macOS Standalone

- .NET 4.x scripting runtime version and API compatibility level

- IL2CPP

- Non-development

- 640×480, Fastest, Windowed

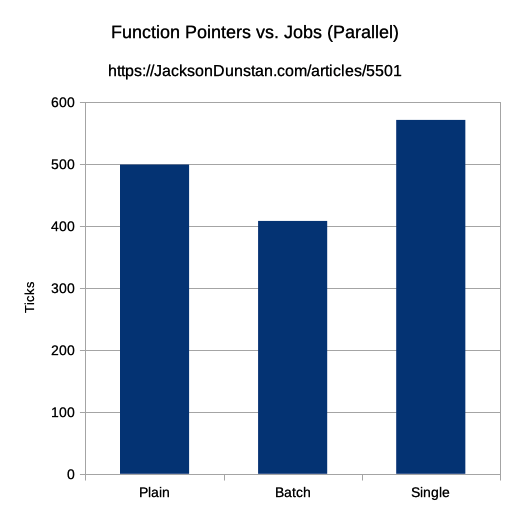

And here are the results I got:

| Job | Ticks (average over 100 frames) |
|---|---|
| Plain | 499 |
| Batch | 408 |
| Single | 571 |

Both the plain time (i.e. just a job, no function pointer) and the batch time (i.e. IJobParallelForBatch calling a function pointer once per batch) are indeed better than the single time (i.e. IJobParallelFor calling a function pointer once per element). The speedup over the single job was 1.4x for the batch job and 1.14x for the plain job. That doesn’t line up with the claimed speedups, but differences in hardware or software may account for that. Especially mysterious is that the plain time is longer than the batch time. The claimed ordering, and the ordering that makes the most sense, would have it the other way around.
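For reference, the speedups quoted above are just ratios of the averaged tick counts. Here is a minimal sketch of that arithmetic. The `SpeedupMath` class and `Speedup` method are hypothetical names used only for illustration, and the last line derives the batch-versus-single ratio implied by the documentation's two figures.

```csharp
using System;

static class SpeedupMath
{
    // Speedup of a job relative to a slower baseline: baseline time / job time
    public static double Speedup(double baselineTicks, double jobTicks)
    {
        return baselineTicks / jobTicks;
    }

    static void Main()
    {
        // Average ticks from the table above
        const long plain = 499, batch = 408, single = 571;

        // Measured ratios: about 1.40, 1.14 and 0.82 respectively
        Console.WriteLine($"Batch vs. single: {Speedup(single, batch):F2}x");
        Console.WriteLine($"Plain vs. single: {Speedup(single, plain):F2}x");
        Console.WriteLine($"Plain vs. batch:  {Speedup(batch, plain):F2}x");

        // The documentation claims plain is 1.26x faster than batch and
        // 1.93x faster than single, which implies batch vs. single of about 1.53
        Console.WriteLine($"Claimed batch vs. single: {1.93 / 1.26:F2}x");
    }
}
```

A plain-versus-batch ratio below 1.0 is the reversed ordering in numeric form: the documentation's figures would put it at 1.26.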

To try again, let’s convert the jobs to all be IJob rather than IJobParallelFor and IJobParallelForBatch:

```csharp
using System.Diagnostics;
using UnityEngine;
using Unity.Burst;
using Unity.Collections;
using Unity.Collections.LowLevel.Unsafe;
using Unity.Jobs;
using Unity.Mathematics;

// Baseline: a plain loop inside a single job, no function pointer
[BurstCompile(CompileSynchronously = true)]
struct PlainJob : IJob
{
    [ReadOnly] public NativeArray<float> Input;
    [WriteOnly] public NativeArray<float> Output;

    public void Execute()
    {
        for (int i = 0; i < Input.Length; ++i)
        {
            Output[i] = math.sqrt(Input[i]);
        }
    }
}

// Function pointer that processes a whole batch of elements per call
[BurstCompile(CompileSynchronously = true)]
public class BatchFunctionPointers
{
    public unsafe delegate void MyFunctionPointerDelegate(int count, float* input, float* output);

    [BurstCompile(CompileSynchronously = true)]
    public static unsafe void MyFunctionPointer(int count, float* input, float* output)
    {
        for (int i = 0; i < count; i++)
        {
            output[i] = math.sqrt(input[i]);
        }
    }
}

// Calls the batch function pointer once for the whole array
[BurstCompile(CompileSynchronously = true)]
struct BatchJob : IJob
{
    public FunctionPointer<BatchFunctionPointers.MyFunctionPointerDelegate> FunctionPointer;
    [ReadOnly] public NativeArray<float> Input;
    [WriteOnly] public NativeArray<float> Output;

    public unsafe void Execute()
    {
        var inputPtr = (float*)Input.GetUnsafeReadOnlyPtr();
        var outputPtr = (float*)Output.GetUnsafePtr();
        FunctionPointer.Invoke(Input.Length, inputPtr, outputPtr);
    }
}

// Function pointer that processes a single element per call
[BurstCompile(CompileSynchronously = true)]
public class SingleFunctionPointers
{
    public unsafe delegate void MyFunctionPointerDelegate(float* input, float* output);

    [BurstCompile(CompileSynchronously = true)]
    public static unsafe void MyFunctionPointer(float* input, float* output)
    {
        *output = math.sqrt(*input);
    }
}

// Calls the single-element function pointer once per element
[BurstCompile(CompileSynchronously = true)]
struct SingleJob : IJob
{
    public FunctionPointer<SingleFunctionPointers.MyFunctionPointerDelegate> FunctionPointer;
    [ReadOnly] public NativeArray<float> Input;
    [WriteOnly] public NativeArray<float> Output;

    public unsafe void Execute()
    {
        for (int index = 0; index < Input.Length; ++index)
        {
            var inputPtr = (float*)Input.GetUnsafeReadOnlyPtr();
            var outputPtr = (float*)Output.GetUnsafePtr();
            FunctionPointer.Invoke(inputPtr + index, outputPtr + index);
        }
    }
}

class TestScript : MonoBehaviour
{
    private const int Len = 10000;

    private NativeArray<float> m_Input;
    private NativeArray<float> m_Output;
    private FunctionPointer<BatchFunctionPointers.MyFunctionPointerDelegate> m_BatchFp;
    private FunctionPointer<SingleFunctionPointers.MyFunctionPointerDelegate> m_SingleFp;
    private PlainJob m_PlainJob;
    private BatchJob m_BatchJob;
    private SingleJob m_SingleJob;
    private Stopwatch m_Stopwatch;
    private long plainTicks;
    private long batchTicks;
    private long singleTicks;
    private long numFrames;

    unsafe void Start()
    {
        m_Input = new NativeArray<float>(Len, Allocator.Persistent);
        m_Output = new NativeArray<float>(Len, Allocator.Persistent);

        m_BatchFp = BurstCompiler.CompileFunctionPointer<
            BatchFunctionPointers.MyFunctionPointerDelegate>(
            BatchFunctionPointers.MyFunctionPointer);
        m_SingleFp = BurstCompiler.CompileFunctionPointer<
            SingleFunctionPointers.MyFunctionPointerDelegate>(
            SingleFunctionPointers.MyFunctionPointer);

        m_PlainJob = new PlainJob
        {
            Input = m_Input,
            Output = m_Output
        };
        m_BatchJob = new BatchJob
        {
            FunctionPointer = m_BatchFp,
            Input = m_Input,
            Output = m_Output
        };
        m_SingleJob = new SingleJob
        {
            FunctionPointer = m_SingleFp,
            Input = m_Input,
            Output = m_Output
        };

        // Warmup
        m_PlainJob.Run();
        m_BatchJob.Run();
        m_SingleJob.Run();

        m_Stopwatch = Stopwatch.StartNew();
    }

    void Update()
    {
        // Time each job separately and accumulate the elapsed ticks
        m_Stopwatch.Restart();
        m_BatchJob.Run();
        batchTicks += m_Stopwatch.ElapsedTicks;

        m_Stopwatch.Restart();
        m_SingleJob.Run();
        singleTicks += m_Stopwatch.ElapsedTicks;

        m_Stopwatch.Restart();
        m_PlainJob.Run();
        plainTicks += m_Stopwatch.ElapsedTicks;

        // Stop measuring after 100 frames; disabling triggers OnDisable
        numFrames++;
        if (numFrames == 100)
        {
            enabled = false;
        }
    }

    void OnDisable()
    {
        m_Input.Dispose();
        m_Output.Dispose();

        // Report the average ticks per frame as CSV
        print(
            "Job,Ticks\n" +
            $"Plain,{plainTicks/numFrames}\n" +
            $"Batch,{batchTicks/numFrames}\n" +
            $"Single,{singleTicks/numFrames}\n");
    }
}
```

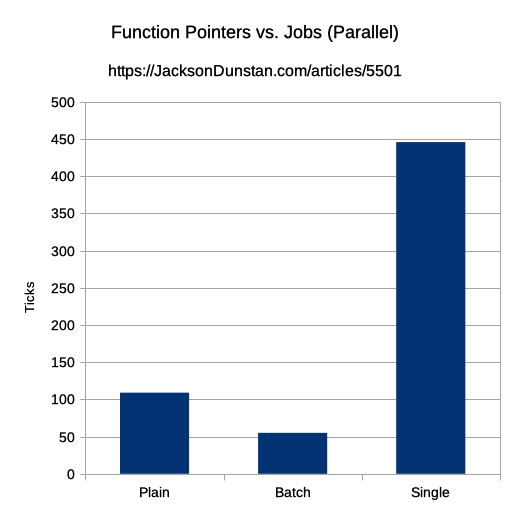

Running in the same environment, I got these results:

| Job | Ticks (average over 100 frames) |
|---|---|
| Plain | 109 |
| Batch | 55 |
| Single | 446 |
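The tick counts here are raw `Stopwatch.ElapsedTicks` values, and the length of a tick depends on the platform's timer. As a rough sketch, they could be converted to microseconds with `Stopwatch.Frequency`. The `TickConversion` class is a hypothetical helper, and the conversion is only meaningful when run on the same machine and runtime that produced the ticks.

```csharp
using System;
using System.Diagnostics;

static class TickConversion
{
    // Converts Stopwatch ticks to microseconds using the timer frequency
    // (ticks per second) reported by the machine running this code
    public static double TicksToMicroseconds(long ticks)
    {
        return ticks * 1_000_000.0 / Stopwatch.Frequency;
    }

    static void Main()
    {
        // Average ticks from the table above
        string[] names = { "Plain", "Batch", "Single" };
        long[] averages = { 109, 55, 446 };

        for (int i = 0; i < names.Length; i++)
        {
            // The printed values vary with Stopwatch.Frequency
            Console.WriteLine($"{names[i]}: {TicksToMicroseconds(averages[i]):F1} us");
        }
    }
}
```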

Here we see the plain job at a 4.09x speedup and the batch job at 8.11x! Those numbers are much larger than with the parallel tests, but the ordering of the plain and batch job times is still reversed.
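One way to dig into the reversed ordering would be a fourth test case that runs the same loop through raw pointers without going through a function pointer. This is an untested sketch, not something I measured. `PlainPointerJob` is a hypothetical name, and it uses only the same `NativeArray` pointer calls as the jobs above.

```csharp
using Unity.Burst;
using Unity.Collections;
using Unity.Collections.LowLevel.Unsafe;
using Unity.Jobs;
using Unity.Mathematics;

// Hypothetical extra test case, not part of the measurements above:
// the plain loop, but indexing raw pointers instead of NativeArray
[BurstCompile(CompileSynchronously = true)]
struct PlainPointerJob : IJob
{
    [ReadOnly] public NativeArray<float> Input;
    [WriteOnly] public NativeArray<float> Output;

    public unsafe void Execute()
    {
        // Same pointer access as BatchJob, without the function pointer call
        var inputPtr = (float*)Input.GetUnsafeReadOnlyPtr();
        var outputPtr = (float*)Output.GetUnsafePtr();
        int count = Input.Length;

        for (int i = 0; i < count; ++i)
        {
            outputPtr[i] = math.sqrt(inputPtr[i]);
        }
    }
}
```

If this job ran about as fast as the batch job, the gap would point at how the plain job indexes `NativeArray` rather than at anything the function pointer does. If it ran about as fast as the plain job, the cause would lie elsewhere.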

While the claimed numbers don’t quite line up with the numbers above, and that remains a mystery, one thing is clear: repeatedly calling a function pointer to perform one iteration of a loop will be quite a lot slower than either a plain loop or calling a function pointer with a batch of work. So the advice in the documentation stands. It’s definitely best to avoid very heavy use of function pointers in performance-critical workloads.

#1 by Neil Henning on March 30th, 2020

Great post – thanks for firstly taking my advice on board and secondly for doing such an awesome investigation of your own.

Keep up the good work!

#2 by Chris on April 1st, 2020

Love these investigations!

I expect that the batch cases are faster because they effectively strip some of the safety checks off of the array index lookups.