Allocating Memory Within a Job

What do you do when a job you’re writing needs to allocate memory? You could allocate it outside of the job and pass it in, but that presents several problems. You can also allocate memory from within a job. Today we’ll look into how that works and some limitations that come along with it.

The Test

We’re going to try allocating memory within a job, but we’d like to learn more than just whether the basics work or not. So we’ll port these distance calculations. This will tell us how close together the allocations are so we can compare with allocations made outside of a job.

The resulting test is quite small and consists of just two parts:

- A job that allocates a bunch of 4-byte blocks with 4-byte alignment. It then computes the distance between those blocks. The block pointers and then the distances are stored in an array parameter.

- A MonoBehaviour that allocates the array parameter and runs the job with it. It then writes a little report of the calculated distances to a file.

Here’s how this looks:

    using System.IO;
    using System.Text;
    using Unity.Burst;
    using Unity.Collections;
    using Unity.Collections.LowLevel.Unsafe;
    using Unity.Jobs;
    using UnityEngine;

    [BurstCompile]
    unsafe struct AllocatorJob : IJob
    {
        public NativeArray<long> Distances;

        public void Execute()
        {
            // Store the address of each allocated block in the array
            for (int i = 0; i < Distances.Length; ++i)
            {
                Distances[i] = (long)UnsafeUtility.Malloc(4, 4, Allocator.Temp);
            }

            // Overwrite each address with the gap to the next block.
            // The last element is left holding an address.
            for (int i = 1; i < Distances.Length; ++i)
            {
                Distances[i - 1] = Distances[i] - (Distances[i - 1] + 4);
            }
        }
    }

    class TestScript : MonoBehaviour
    {
        private void Start()
        {
            using (var distances = new NativeArray<long>(1000, Allocator.TempJob))
            {
                new AllocatorJob { Distances = distances }.Schedule().Complete();

                // Skip the last element: it holds an address, not a distance
                StringBuilder distReport = new StringBuilder(distances.Length * 32);
                for (int i = 0; i < distances.Length - 1; ++i)
                {
                    distReport.Append(distances[i]).Append('\n');
                }

                string fileName = "distances.csv";
                string distPath = Path.Combine(Application.dataPath, fileName);
                File.WriteAllText(distPath, distReport.ToString());
            }
        }
    }
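To see what the in-place distance calculation produces, here is a minimal plain C# sketch that mirrors the job's second loop on a managed array. The class name `DistanceSketch` and the sample addresses are made up for illustration; the addresses were chosen so the gaps come out to the 28- and 44-byte values discussed in the results.

```csharp
using System;

static class DistanceSketch
{
    // Mirrors the second loop of AllocatorJob.Execute: each slot starts out
    // holding a block address and is overwritten with the gap between the
    // end of that block and the start of the next one.
    public static long[] ToDistances(long[] addresses, long blockSize)
    {
        long[] result = (long[])addresses.Clone();
        for (int i = 1; i < result.Length; ++i)
        {
            // result[i] is still an address here; result[i - 1] becomes a distance
            result[i - 1] = result[i] - (result[i - 1] + blockSize);
        }
        return result;
    }
}
```

Calling `DistanceSketch.ToDistances(new long[] { 1000, 1032, 1080, 1112 }, 4)` yields `{ 28, 44, 28, 1112 }`. The final slot still holds an address, which is why the report loop stops at `distances.Length - 1`.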

Test Results

I ran the test in this environment:

- 2.7 GHz Intel Core i7-6820HQ

- macOS 10.15.2

- Unity 2019.2.19f1

- macOS Standalone

- .NET 4.x scripting runtime version and API compatibility level

- IL2CPP

- Non-development

- 640×480, Fastest, Windowed

And here are the results I got:

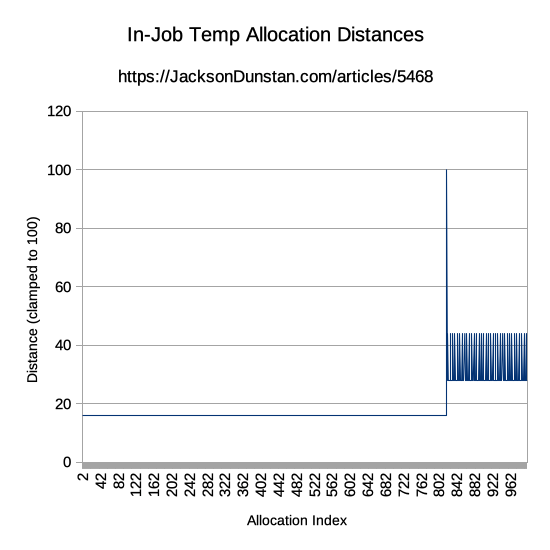

The graph looks exactly the same as last time, even down to the “overflow” point on the 820th allocation. That’s likely the point where the fixed-size block of memory backing the Temp allocator is exhausted and an alternative allocator (TempJob?) takes over. After that point, the same repeating cycle of 28- and 44-byte distances is observed, just as it was before with Temp overflows and TempJob.

Now let’s look at the Burst Inspector to see the disassembly the job compiled to. I’ve trimmed it down and annotated the call to UnsafeUtility.Malloc:

        push    r15
        push    r14
        push    r13
        push    r12
        push    rbx
        mov     r12, rdi
        movsxd  r15, dword ptr [r12 + 8]
        test    r15, r15
        jle     .LBB0_12
        mov     r14d, r15d
        xor     ebx, ebx
        movabs  r13, offset ".LUnity.Collections.LowLevel.Unsafe.UnsafeUtility::Malloc_Ptr"
    .LBB0_2:
        mov     edi, 4                  ; size = 4
        mov     esi, 4                  ; alignment = 4
        mov     edx, 2                  ; allocator = Allocator.Temp
        call    qword ptr [r13]         ; <--- call to UnsafeUtility.Malloc
        mov     rcx, qword ptr [r12]
        mov     qword ptr [rcx + 8*rbx], rax
        inc     rbx
        cmp     r15, rbx
        jne     .LBB0_2
        cmp     r14d, 2
        jl      .LBB0_12
        mov     rdx, qword ptr [rcx]
        lea     rax, [r14 - 1]
        cmp     rax, 4
        jae     .LBB0_6
        mov     esi, 1
        jmp     .LBB0_10
    .LBB0_6:
        add     r15d, 3
        and     r15d, 3
        sub     rax, r15
        movq    xmm0, rdx
        pshufd  xmm0, xmm0, 68
        lea     rdx, [rcx + 24]
        movabs  rsi, offset .LCPI0_0
        movdqa  xmm1, xmmword ptr [rsi]
        lea     rsi, [rax + 1]
    .LBB0_7:
        movdqu  xmm2, xmmword ptr [rdx - 16]
        movdqa  xmm3, xmm2
        palignr xmm3, xmm0, 8
        movdqu  xmm0, xmmword ptr [rdx]
        movdqa  xmm4, xmm0
        palignr xmm4, xmm2, 8
        movdqa  xmm5, xmm1
        psubq   xmm5, xmm3
        movdqa  xmm3, xmm1
        psubq   xmm3, xmm4
        paddq   xmm5, xmm2
        paddq   xmm3, xmm0
        movdqu  xmmword ptr [rdx - 24], xmm5
        movdqu  xmmword ptr [rdx - 8], xmm3
        add     rdx, 32
        add     rax, -4
        jne     .LBB0_7
        test    r15d, r15d
        je      .LBB0_12
        pextrq  rdx, xmm0, 1
    .LBB0_10:
        sub     r14, rsi
        lea     rax, [rcx + 8*rsi]
    .LBB0_11:
        mov     rcx, -4
        sub     rcx, rdx
        mov     rdx, qword ptr [rax]
        add     rcx, rdx
        mov     qword ptr [rax - 8], rcx
        add     rax, 8
        dec     r14
        jne     .LBB0_11
    .LBB0_12:
        pop     rbx
        pop     r12
        pop     r13
        pop     r14
        pop     r15
        ret

The major takeaway here is that the engine is indeed being called to allocate memory dynamically. It hasn’t been replaced by stack allocation or some other trick.

Sharing the Block

Let’s insert a block of code at the start of TestScript.Start to use up some of the fixed-size block before the job runs:

    for (int i = 0; i < 100; ++i)
    {
        UnsafeUtility.Malloc(4, 4, Allocator.Temp);
    }

Running the test again, we see that the overflow still occurs on the 820th allocation. This means the allocations performed outside the job aren’t affecting the allocations performed inside the job. Is this because there’s one fixed-size block of memory per thread? The details are inside the engine code, so we don’t really know.

Other Allocators

Next, we’ll try using the TempJob and Persistent allocators within the job. Since these aren’t deallocated automatically by Unity, we’ll also need to add a loop at the end of the test to handle that:

    for (int i = 0; i < Distances.Length; ++i)
    {
        UnsafeUtility.Free((void*)Distances[i], Allocator.TempJob); // or Allocator.Persistent
    }

Running this causes Unity to spike to 100% CPU utilization and hang forever. No warning or error messages are printed, so it’s unclear why this happens. If we add the above code into the Temp allocator version, it works just fine.
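One detail worth ruling out when reproducing this: once the second loop of `Execute` has run, every element of `Distances` except the last holds a distance rather than a pointer. If the freeing loop runs after that point, it passes those distances to `UnsafeUtility.Free`. The sketch below is a hypothetical variant (the name `AllocateFreeThenMeasureJob` is not from the original test) that frees while the array still holds pointers and only then computes the distances from the saved address values. It has not been run against the hang described above, so treat it as a diagnostic to try rather than a fix.

```csharp
using Unity.Burst;
using Unity.Collections;
using Unity.Collections.LowLevel.Unsafe;
using Unity.Jobs;

[BurstCompile]
unsafe struct AllocateFreeThenMeasureJob : IJob
{
    public NativeArray<long> Distances;

    public void Execute()
    {
        // Allocate every block first so all addresses are distinct
        for (int i = 0; i < Distances.Length; ++i)
        {
            Distances[i] = (long)UnsafeUtility.Malloc(4, 4, Allocator.TempJob);
        }

        // Free while each element still holds the pointer Malloc returned
        for (int i = 0; i < Distances.Length; ++i)
        {
            UnsafeUtility.Free((void*)Distances[i], Allocator.TempJob);
        }

        // The addresses are now only used as integers, never dereferenced
        for (int i = 1; i < Distances.Length; ++i)
        {
            Distances[i - 1] = Distances[i] - (Distances[i - 1] + 4);
        }
    }
}
```

It can be scheduled from `TestScript.Start` exactly like `AllocatorJob`.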

Collections in Jobs

Now let’s try using a native collection inside a job rather than using UnsafeUtility.Malloc and directly accessing the memory via the returned pointer. As a contrived example, let’s write a job that checks if an array of integers from 0 to 9 has any duplicates. If it doesn’t, we’ll call it “unique.” Here’s how that’d look:

    using Unity.Burst;
    using Unity.Collections;
    using Unity.Jobs;
    using UnityEngine;

    [BurstCompile]
    struct IsUniqueJob : IJob
    {
        public NativeArray<int> Values;
        public NativeArray<bool> IsUnique;

        public void Execute()
        {
            NativeArray<int> counts = new NativeArray<int>(10, Allocator.Temp);
            for (int i = 0; i < Values.Length; ++i)
            {
                counts[Values[i]]++;
            }
            for (int i = 0; i < counts.Length; ++i)
            {
                if (counts[i] > 1)
                {
                    IsUnique[0] = false;
                    return;
                }
            }
            IsUnique[0] = true;
        }
    }

    class TestScript : MonoBehaviour
    {
        private void Start()
        {
            using (var values = new NativeArray<int>(
                new []{ 1, 2, 3, 4, 5, 6, 7, 8, 9 },
                Allocator.TempJob))
            {
                using (var isUnique = new NativeArray<bool>(1, Allocator.TempJob))
                {
                    new IsUniqueJob { Values = values, IsUnique = isUnique }.Schedule().Complete();
                    print(isUnique[0]);
                }
            }
        }
    }

As expected, this prints true. If we change the array to new []{ 1, 2, 3, 4, 5, 6, 7, 3, 9 } so there are duplicates, it prints false.
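The counting logic itself can be checked outside Unity. This is a minimal plain C# mirror of `Execute`, assuming a managed `int[]` stands in for the Temp-allocated `NativeArray<int>`; the class name `IsUniqueSketch` is made up for illustration.

```csharp
using System;

static class IsUniqueSketch
{
    // Same counting approach as IsUniqueJob.Execute, with a managed array
    // in place of the Temp-allocated NativeArray<int>.
    public static bool IsUnique(int[] values)
    {
        int[] counts = new int[10]; // zero-initialized, one slot per value 0-9
        for (int i = 0; i < values.Length; ++i)
        {
            counts[values[i]]++; // throws if a value falls outside 0-9
        }
        for (int i = 0; i < counts.Length; ++i)
        {
            if (counts[i] > 1)
            {
                return false;
            }
        }
        return true;
    }
}
```

`IsUniqueSketch.IsUnique(new[] { 1, 2, 3, 4, 5, 6, 7, 8, 9 })` returns `true`, and replacing the `8` with a second `3` makes it return `false`, matching the two results reported for the job.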

Taking a peek at the Burst Inspector, we see the same kind of UnsafeUtility.Malloc call as when we were calling it directly. We also see usage of AtomicSafetyHandle and UnsafeUtility.MemClear, which NativeArray calls internally.

        push    r14
        push    rbx
        sub     rsp, 24
        mov     rbx, rdi
        movabs  rax, offset ".LUnity.Collections.LowLevel.Unsafe.UnsafeUtility::Malloc_Ptr"
        mov     edi, 40
        mov     esi, 4
        mov     edx, 2
        call    qword ptr [rax]
        mov     r14, rax
        movabs  rax, offset ".LUnity.Collections.LowLevel.Unsafe.AtomicSafetyHandle::GetTempMemoryHandle_Injected_Ptr"
        lea     rdi, [rsp + 8]
        call    qword ptr [rax]
        movabs  rax, offset ".LUnity.Collections.LowLevel.Unsafe.UnsafeUtility::MemClear_Ptr"
        mov     esi, 40
        mov     rdi, r14
        call    qword ptr [rax]
        cmp     dword ptr [rbx + 8], 0
        jle     .LBB0_3
        xor     eax, eax
    .LBB0_2:
        mov     rcx, qword ptr [rbx]
        movsxd  rcx, dword ptr [rcx + 4*rax]
        inc     dword ptr [r14 + 4*rcx]
        inc     rax
        movsxd  rcx, dword ptr [rbx + 8]
        cmp     rax, rcx
        jl      .LBB0_2
    .LBB0_3:
        cmp     dword ptr [r14], 1
        jg      .LBB0_4
        cmp     dword ptr [r14 + 4], 1
        jg      .LBB0_4
        cmp     dword ptr [r14 + 8], 1
        jg      .LBB0_4
        cmp     dword ptr [r14 + 12], 1
        jg      .LBB0_4
        cmp     dword ptr [r14 + 16], 1
        jg      .LBB0_4
        cmp     dword ptr [r14 + 20], 1
        jg      .LBB0_4
        cmp     dword ptr [r14 + 24], 1
        jg      .LBB0_4
        cmp     dword ptr [r14 + 28], 1
        jg      .LBB0_4
        cmp     dword ptr [r14 + 32], 1
        jle     .LBB0_21
    .LBB0_4:
        xor     eax, eax
    .LBB0_22:
        mov     rcx, qword ptr [rbx + 56]
        mov     byte ptr [rcx], al
        add     rsp, 24
        pop     rbx
        pop     r14
        ret
    .LBB0_21:
        cmp     dword ptr [r14 + 36], 2
        setl    al
        jmp     .LBB0_22

Conclusions

The Temp allocator is fully usable from within jobs, either directly with UnsafeUtility.Malloc or indirectly with collections like NativeArray. Burst compiles it to actual dynamic memory allocation calls within the Unity engine. These allocations behave just like allocations outside of a job: they are apparently backed by a fixed-size block of memory that’s allocated sequentially before “overflowing” to another allocator.

The fixed-size block used by the Temp allocator inside the job appears to be distinct from the fixed-size block used outside the job. There may be one fixed-size block per thread, per job, or for all jobs, but we don’t really know at this point. Even overflow allocations are allowed within the job. None of these allocations requires explicit deallocation, and freeing them explicitly may even be a bad idea.

The TempJob and Persistent allocators, on the other hand, cause Unity to hang and should not be used from within a job.
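For cases that call for TempJob memory anyway, the alternative mentioned at the start still applies: allocate outside the job and pass the memory in. Here is a minimal sketch of IsUniqueJob reworked that way. The names `IsUniqueScratchJob`, `ScratchTestScript`, and `Counts` are hypothetical, and the sketch relies on NativeArray clearing its memory by default.

```csharp
using Unity.Burst;
using Unity.Collections;
using Unity.Jobs;
using UnityEngine;

[BurstCompile]
struct IsUniqueScratchJob : IJob
{
    public NativeArray<int> Values;
    public NativeArray<int> Counts;    // scratch space allocated by the caller
    public NativeArray<bool> IsUnique;

    public void Execute()
    {
        // No allocation happens here; Counts arrives zeroed from the caller
        for (int i = 0; i < Values.Length; ++i)
        {
            Counts[Values[i]]++;
        }
        for (int i = 0; i < Counts.Length; ++i)
        {
            if (Counts[i] > 1)
            {
                IsUnique[0] = false;
                return;
            }
        }
        IsUnique[0] = true;
    }
}

class ScratchTestScript : MonoBehaviour
{
    private void Start()
    {
        using (var values = new NativeArray<int>(
            new[] { 1, 2, 3, 4, 5, 6, 7, 8, 9 }, Allocator.TempJob))
        using (var counts = new NativeArray<int>(10, Allocator.TempJob))
        using (var isUnique = new NativeArray<bool>(1, Allocator.TempJob))
        {
            new IsUniqueScratchJob
            {
                Values = values,
                Counts = counts,
                IsUnique = isUnique
            }.Schedule().Complete();
            print(isUnique[0]);
        }
    }
}
```

The trade-off is that the caller has to know the scratch size up front and own its lifetime, which is one of the problems that allocating inside the job avoids.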

#1 by Benjamin Guihaire on January 27th, 2020

Careful with Allocator.Temp if the job is a lazy job that can last more than one frame !

#2 by jackson on January 28th, 2020

Excellent point! I will definitely look into this.