What are Your Latency Requirements?

Unity’s Mike Acton gave a talk this week at GDC titled “Everyone watching this is fired.” One point he made concerned the importance of knowing the latency requirements of our code: when does the result need to be ready? Today we’ll talk about the ramifications of answering that question with anything other than “immediately” and see how that can lead to better code.

Mike says a good engineer should be able to state the following:

I can articulate the (various) latency requirements for my current problem.

He indicated in passing that the answer is not always “immediately” and alluded to the issues that crop up when results are simply returned from a function.

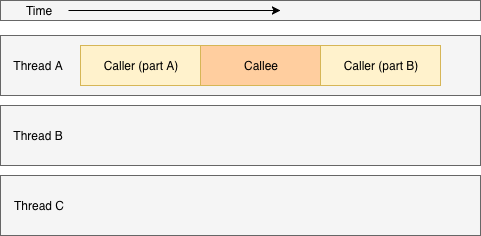

This is an intriguing point, as most Unity code is written to simply run synchronously and return its result. However, this usual approach has large downsides. The major issue is that such code becomes much more difficult to multi-thread. By its nature, the body of a function executes on the same thread as the body of the calling function. Execution takes place immediately, and the calling function resumes as soon as the called function returns. Here’s how that looks on a system with three threads:

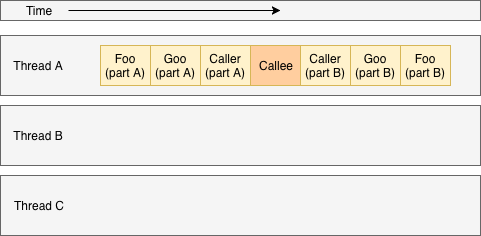

Now imagine that Foo calls Goo, which calls Caller, which in turn calls Callee. All of these functions execute on the same thread synchronously like this:

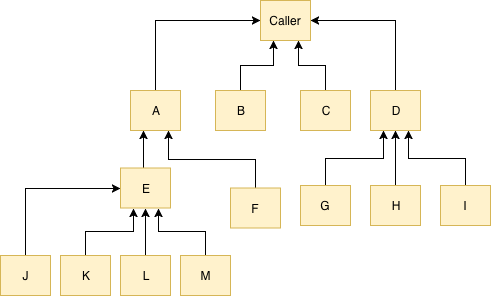
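In code, such a chain might look like the following minimal sketch. The function names come from the example above, but the bodies, the `int[]` parameter, and the idea that Caller is the one invoking Callee are assumptions made purely for illustration.

```csharp
using System;

static class SynchronousChain
{
    // Hypothetical stand-in for the slow work done by Callee.
    static int Callee(int[] values)
    {
        int sum = 0;
        foreach (int v in values)
        {
            sum += v;
        }
        return sum; // the caller is blocked until this returns
    }

    // Each function needs its callee's result before it can continue,
    // so the whole chain runs on one thread, one call at a time.
    static int Caller(int[] values) => Callee(values) * 2;
    static int Goo(int[] values) => Caller(values) + 1;
    static int Foo(int[] values) => Goo(values);

    static void Main()
    {
        Console.WriteLine(Foo(new[] { 1, 2, 3 })); // prints 13
    }
}
```

Every function here returns its result directly, so each signature silently promises an answer "immediately."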

This is a relatively small call stack. In real games we end up with very deep stacks of synchronous dependencies. We may also have many functions that indirectly end up calling Caller. We may end up with a dependency tree like this:

Now imagine that Callee is determined to be slow and we want to move it onto another thread so it can run on another CPU core. All of the functions directly and indirectly calling it now need to be updated because the result they were expecting immediately is no longer provided that way. We may end up needing to change dozens or even hundreds of functions. That much work may be deemed too costly, or may take more time than is available, so it may simply never happen and the performance problem may never be solved.

Even if the work is done to update the whole synchronous call tree, many issues may occur as a result of it. Now that Callee is running simultaneously with the myriad functions that used to call it directly, it’s now possible that they’ll contend for some shared memory. To fix this, we may end up adding mutexes or lock blocks to synchronize the threads’ access to the shared memory. Since the code was never designed for this in the first place, it may be very difficult to get this right and bugs may result.

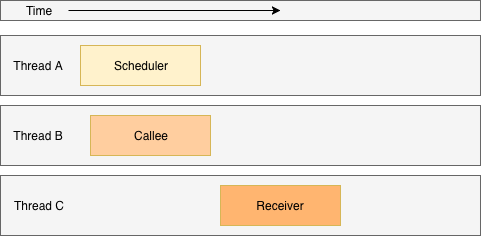
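To make the manual-synchronization burden concrete, here is a small sketch using plain C# `lock` blocks. The `SharedTotals` class and its members are hypothetical; the point is only that every single access to the shared field has to remember to take the same lock.

```csharp
using System;
using System.Threading.Tasks;

// Hypothetical shared state that several threads update.
class SharedTotals
{
    private readonly object gate = new object();
    private int total;

    public void Add(int amount)
    {
        // Without this lock, concurrent += operations can lose updates.
        lock (gate)
        {
            total += amount;
        }
    }

    public int Total
    {
        get
        {
            lock (gate)
            {
                return total;
            }
        }
    }
}

static class LockDemo
{
    static void Main()
    {
        var totals = new SharedTotals();

        // Parallel.For returns only after all iterations have finished.
        Parallel.For(0, 1000, i => totals.Add(1));

        Console.WriteLine(totals.Total); // prints 1000
    }
}
```

This works for one small class, but nothing stops a new code path from touching the field without the lock, which is the kind of bug that is hard to retrofit away in a large synchronous code base.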

Now let’s assume an alternative design was taken from the start. In this case we’ll convert Callee into a Unity job. Instead of calling it directly or calling its Execute method, we’ll call Schedule, the extension method Unity provides for IJob structs. Then we can use the returned JobHandle as a dependency when scheduling more jobs, or we can simply call Complete on it when we really need the result. With this design, Unity’s job system naturally spreads our work out across threads, and our functions end up running on several threads like this:
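As a rough sketch of that design in code, the example below schedules one job and then a second job that depends on it. `CalleeJob`, `ScaleJob`, and `ScheduleExample` are hypothetical names invented for this illustration; `IJob`, `Schedule`, `JobHandle`, `Complete`, and `NativeArray` are the Unity APIs the text refers to.

```csharp
using Unity.Collections;
using Unity.Jobs;
using UnityEngine;

// Hypothetical job standing in for the article's Callee.
struct CalleeJob : IJob
{
    [ReadOnly] public NativeArray<float> Input;
    public NativeArray<float> Sum; // single-element output

    public void Execute()
    {
        float total = 0f;
        for (int i = 0; i < Input.Length; i++)
        {
            total += Input[i];
        }
        Sum[0] = total;
    }
}

// Hypothetical follow-up job that consumes CalleeJob's output.
struct ScaleJob : IJob
{
    public NativeArray<float> Sum;
    public float Factor;

    public void Execute()
    {
        Sum[0] *= Factor;
    }
}

public class ScheduleExample : MonoBehaviour
{
    void Start()
    {
        var input = new NativeArray<float>(new[] { 1f, 2f, 3f }, Allocator.TempJob);
        var sum = new NativeArray<float>(1, Allocator.TempJob);

        // Schedule returns immediately with a handle instead of a result.
        JobHandle calleeHandle = new CalleeJob { Input = input, Sum = sum }.Schedule();

        // Passing the handle makes ScaleJob wait for CalleeJob to finish.
        JobHandle scaleHandle = new ScaleJob { Sum = sum, Factor = 2f }.Schedule(calleeHandle);

        // ... other main-thread work could happen here ...

        // Block only at the point where the result is actually needed.
        scaleHandle.Complete();
        Debug.Log(sum[0]); // (1 + 2 + 3) * 2 = 12

        input.Dispose();
        sum.Dispose();
    }
}
```

Nothing in `Start` depends on which thread ran either job. The only place latency is decided is the call to `Complete`.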

Since Unity’s job system is resource-aware, we automatically have error-checking in place to alert us when we have a potential conflict among job fields such as a NativeArray. There’s no need to manually synchronize with lock blocks, which is notoriously error-prone and oftentimes quite inefficient.
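Here is a sketch of what that error-checking can look like in practice. `IncrementJob` and `SafetyExample` are hypothetical names. The assumption, which matches the behavior I know from the Editor, is that scheduling a second writer to the same NativeArray without a dependency throws an `InvalidOperationException` when safety checks are enabled; in builds without those checks, no exception is expected.

```csharp
using System;
using Unity.Collections;
using Unity.Jobs;
using UnityEngine;

// Hypothetical job that writes to every element of a NativeArray.
struct IncrementJob : IJob
{
    public NativeArray<int> Values;

    public void Execute()
    {
        for (int i = 0; i < Values.Length; i++)
        {
            Values[i]++;
        }
    }
}

public class SafetyExample : MonoBehaviour
{
    void Start()
    {
        var values = new NativeArray<int>(4, Allocator.TempJob);
        JobHandle first = new IncrementJob { Values = values }.Schedule();

        // Stays at its default value if the safety system rejects the job.
        JobHandle conflicting = default;
        try
        {
            // No dependency on `first`, so both jobs could write Values at once.
            conflicting = new IncrementJob { Values = values }.Schedule();
        }
        catch (InvalidOperationException e)
        {
            Debug.LogWarning("Safety system caught a conflict: " + e.Message);
        }

        // Declaring the dependency tells the job system how to order the writers.
        JobHandle dependencies = JobHandle.CombineDependencies(first, conflicting);
        JobHandle second = new IncrementJob { Values = values }.Schedule(dependencies);

        second.Complete();
        values.Dispose();
    }
}
```

The fix is a declared dependency rather than a lock: the conflict is resolved by ordering the jobs, not by having them fight over the array at runtime.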

This arrangement allows us to provide results at a latency greater than “right now.” If we think about our problem and realize we don’t need the resulting data until later on in the frame, next frame, or even several seconds later, we can simply not call JobHandle.Complete until that later point in time. Meanwhile, we’ll be making good use of the CPU’s cores and be sure we’re thread-safe.
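A result that is only needed "some frames later" could be handled along these lines. `SlowSumJob` and `DeferredResult` are hypothetical, and polling `IsCompleted` from `Update` is just one possible way to defer the call to `Complete`. `Allocator.Persistent` is used because the arrays may need to outlive the few frames that shorter-lived allocators are meant for.

```csharp
using Unity.Collections;
using Unity.Jobs;
using UnityEngine;

// Hypothetical job whose result is not needed right away.
struct SlowSumJob : IJob
{
    [ReadOnly] public NativeArray<float> Input;
    public NativeArray<float> Sum; // single-element output

    public void Execute()
    {
        float total = 0f;
        for (int i = 0; i < Input.Length; i++)
        {
            total += Input[i];
        }
        Sum[0] = total;
    }
}

public class DeferredResult : MonoBehaviour
{
    private NativeArray<float> input;
    private NativeArray<float> sum;
    private JobHandle handle;
    private bool pending;

    void Start()
    {
        input = new NativeArray<float>(1024, Allocator.Persistent);
        for (int i = 0; i < input.Length; i++)
        {
            input[i] = 1f;
        }
        sum = new NativeArray<float>(1, Allocator.Persistent);

        handle = new SlowSumJob { Input = input, Sum = sum }.Schedule();

        // Ask the job system to start queued jobs now rather than
        // waiting for something to call Complete.
        JobHandle.ScheduleBatchedJobs();
        pending = true;
    }

    void Update()
    {
        // Consume the result on whichever later frame it is ready.
        if (pending && handle.IsCompleted)
        {
            // Complete is still required before reading the output on the main thread.
            handle.Complete();
            pending = false;
            Debug.Log(sum[0]); // 1024 ones summed = 1024
        }
    }

    void OnDestroy()
    {
        // Make sure the job is finished before freeing its memory.
        handle.Complete();
        input.Dispose();
        sum.Dispose();
    }
}
```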

Of course this is not to say that all functions should be converted into Unity jobs. Most functions run so fast they’ll never need to be offloaded to another CPU core. The latency requirements of functions like Math.Sqrt really are “right now.” However, whenever we’re planning out a system we should look for opportunities to instead answer “later” because that allows us to better parallelize the work and keep our code more flexible at the same time. If we end up wanting the result synchronously, we can always call the job’s Execute method directly or use the Run extension method.
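The flexibility described here can be sketched with a single job struct used both ways. `SquareJob` and `RunOrSchedule` are hypothetical names; `Run` is the extension method in `Unity.Jobs` that executes a job immediately on the calling thread.

```csharp
using Unity.Collections;
using Unity.Jobs;
using UnityEngine;

// Hypothetical job that squares a single value in place.
struct SquareJob : IJob
{
    public NativeArray<float> Value;

    public void Execute()
    {
        Value[0] *= Value[0];
    }
}

public class RunOrSchedule : MonoBehaviour
{
    void Start()
    {
        var value = new NativeArray<float>(1, Allocator.TempJob);
        value[0] = 3f;
        var job = new SquareJob { Value = value };

        // Latency requirement "right now": run on the calling thread.
        job.Run();
        Debug.Log(value[0]); // 3 * 3 = 9

        // Latency requirement "later": same job, scheduled instead.
        JobHandle handle = job.Schedule();
        handle.Complete();
        Debug.Log(value[0]); // 9 * 9 = 81

        value.Dispose();
    }
}
```

Because the work lives in a job struct, the choice between "now" and "later" is made at the call site rather than baked into the function's signature.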

To read the rest of Mike’s talk, check out the slides.

#1 by Josh K on March 25th, 2019 ·

This was a great talk. Glad to get more detail here. When is your GDC talk? ;)

#2 by Nawar on March 27th, 2019 ·

I couldn’t attend the talk as I had Summit Only pass. I was looking forward to it. Thanks for the post!