FastList: A Solution to List’s GC Problems?

As we know, foreach loops create garbage when used with a List<T>. This happens the first time you iterate over one and it happens every time thereafter. A comment on that article shared a link to a class called FastList that was written expressly to solve the GC issue. Does it? How does its performance compare to plain old List? Today’s article puts it to the test to find out!

Reading through the FastList source code it quickly became apparent to me that it’s a wrapper around the regular System.Collections.Generic.List class. It actually derives from that class and inherits almost all of the functionality you get with List. The one part it customizes is at the heart of foreach loops: GetEnumerator.

The customized GetEnumerator uses an existing enumerator cached as a private field, if available. This means that subsequent foreach loops will reuse the enumerator fields rather than creating new garbage… mostly. Since an enumerator can only be used by one foreach loop at a time, a new enumerator will still have to be created if there is more than one loop going on at a time. This could be due to multiple threads looping over the same FastList or just direct usage of GetEnumerator rather than simple foreach loops.

What happens on the first call to GetEnumerator? Well, FastList has a nested CustomEnumerator class that it instantiates. This creates garbage, but only this first time due to the caching described above. CustomEnumerator implements IEnumerator and IEnumerator<T> so that it is a valid candidate for GetEnumerator to return. It also collaborates with FastList to allow itself to be reset (via MyReset) and to use FastList to get the real enumerator from List (via GetBaseEnumerator). Other than that, it’s a wrapper class too! It just wraps the calls to IEnumerator interface functions by passing them along to the enumerator it got from List.

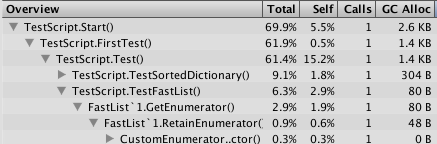

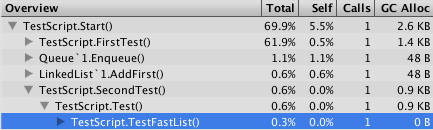

So does it deliver on the promise of not creating garbage? To see, I plugged it into the same test app from the original article and observed the Unity Profiler in “deep” mode. Here’s what I found:

As you can see, the first foreach loop causes 80 bytes to be allocated. You might remember that List allocated only 40 bytes so FastList starts off at a disadvantage. However, the second foreach loop uses the cached enumerator and doesn’t create any garbage at all! List, on the other hand, created another 40 bytes. So after just two loops the score is even, but each subsequent loop would cause List to allocate yet-another 40 bytes.

That’s a pretty nice win for FastList, but does it come at a performance price? To find out, I started with the performance test in this article and modified it so that all the test loops use foreach and added FastList. Here’s the final test script:

using System.Collections.Generic; using System.Reflection; using System.Runtime; using UnityEngine; public class TestScript : MonoBehaviour { private string report; void Start() { GCSettings.LatencyMode = GCLatencyMode.LowLatency; report = string.Join( "\n", new string[]{ "Test,Array Time,List Time,LinkedList Time,FastList Time", Test(1000000), Test(2000000), Test(3000000), Test(4000000), Test(5000000), Test(6000000), Test(7000000), Test(8000000), Test(9000000), Test(10000000) } ); } string Test(int length) { var stopwatch = new System.Diagnostics.Stopwatch(); var array = new int[length]; var list = new List<int>(); var linkedList = new LinkedList<int>(); var fastList = new FastList<int>(); for (var i = 0; i < length; ++i) { array[i] = i; list.Add(i); linkedList.AddLast(i); fastList.Add(i); } int sum; stopwatch.Reset(); stopwatch.Start(); sum = 0; foreach (var i in array) { sum += i; } var arrayTime = stopwatch.ElapsedMilliseconds; stopwatch.Reset(); stopwatch.Start(); sum = 0; foreach (var i in list) { sum += i; } var listTime = stopwatch.ElapsedMilliseconds; stopwatch.Reset(); stopwatch.Start(); sum = 0; foreach (var i in linkedList) { sum += i; } var linkedListTime = stopwatch.ElapsedMilliseconds; stopwatch.Reset(); stopwatch.Start(); sum = 0; foreach (var i in fastList) { sum += i; } var fastListTime = stopwatch.ElapsedMilliseconds; return string.Join( ",", new string[] { length.ToString(), arrayTime.ToString(), listTime.ToString(), linkedListTime.ToString(), fastListTime.ToString() } ); } void OnGUI() { GUI.TextArea(new Rect(0, 0, Screen.width, Screen.height), report); } }

If you want to try out the test yourself, simply paste the above code into a TestScript.cs file in your Unity project’s Assets directory and attach it to the main camera game object in a new, empty project. Then build in non-development mode for 64-bit processors and run it windowed at 640×480 with fastest graphics. I ran it that way on this machine:

- 2.3 Ghz Intel Core i7-3615QM

- Mac OS X 10.11.3

- Unity 5.3.2f1, Mac OS X Standalone, x86_64, non-development

- 640×480, Fastest, Windowed

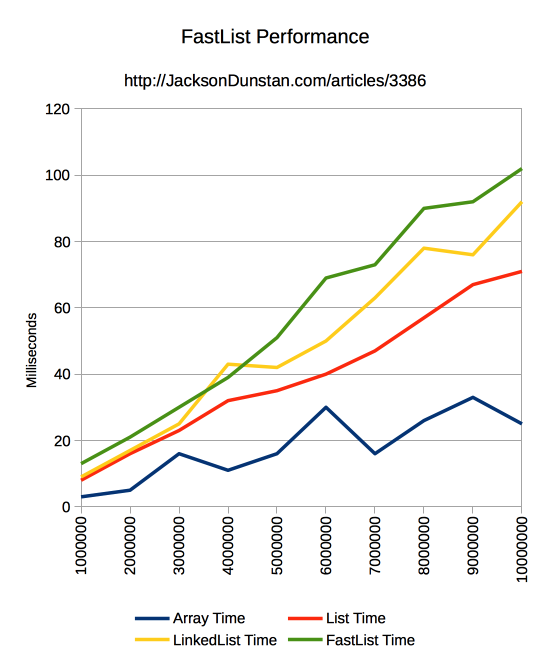

And here are the results I got:

| Test | Array Time | List Time | LinkedList Time | FastList Time |

|---|---|---|---|---|

| 1000000 | 3 | 8 | 9 | 13 |

| 2000000 | 5 | 16 | 17 | 21 |

| 3000000 | 16 | 23 | 25 | 30 |

| 4000000 | 11 | 32 | 43 | 39 |

| 5000000 | 16 | 35 | 42 | 51 |

| 6000000 | 30 | 40 | 50 | 69 |

| 7000000 | 16 | 47 | 63 | 73 |

| 8000000 | 26 | 57 | 78 | 90 |

| 9000000 | 33 | 67 | 76 | 92 |

| 10000000 | 25 | 71 | 92 | 102 |

Charts like this will always have some noise, but you can see that the overall trend is pretty clear. The three list classes—List, FastList, and LinkedList—all scaled up at about a 45 degree angle: linear performance. Array used the CPU cache to do even better than linear performance due to some special case code that makes its foreach loop much more efficient and produce no garbage. FastList was the slowest of the bunch, but not by a tremendous margin. It probably suffers from extra function call overhead due to the wrapping code that makes it work.

In the end it looks like we have a solid class to get the benefits of List with much less garbage creation, provided we loop over it at least twice with foreach. The performance is nearly as good, too. Is that good enough to use it in your project? Let me know if you’re tempted in the comments!

#1 by Kristián on March 7th, 2016 ·

And what if I use simply just for loops when working with List ?? .. is there any garbage created, too ?? ..

#2 by jackson on March 7th, 2016 ·

Nope, only

foreachloops which callGetEnumeratorcreate any garbage. They’re also faster thanforeach, just not as nice to use or as flexible (e.g. for working withIEnumerableinstead of plain arrays).#3 by focus on April 23rd, 2016 ·

Thanks for looking into FastList, Jackson! =)

#4 by Yuri Tikhomirov on April 23rd, 2016 ·

Hey Jackson!

According to your performance test, I see now “FastList” is not the best name for that kind of implementation =)

But overall the purpose was to rapidly make a list that won’t produce garbage when it is being enumerated using IEnumerator (foreach, but not passing this list as IEnumerable since it actually does not overrides GetEnumerator() method).

So it have very limited usage as described in the very first lines of comments there.

Also I’d like to specify the following:

1. This list is totally not thread safe, it could not be used without care in multithreaded environment. It’s actually made for Unity to work within main thread. Enumerating this list using foreach loop in different threads will probably lead to major failures since different threads could possibly retain control over the same instance of CustomEnumerator.

2. I’m not sure I understand this term correctly, but as for me, producing garbage is not the same as allocating object. When you make screenshots from Unity Profiler, you could see the column is named “GC Alloc”, this means, it show how many bytes of memory is allocated during this frame. And it tells nothing about garbage collection. So, what I want to say, you are not producing garbage when allocating objects – until this objects are ‘alive’, they are not supposed to be treated as garbage =) But when you ‘release’ all the references to that object, that’s the point from which the garbage collection may occur and when you actually ‘producing garbage’.

So, as from my point, it’s totally ok when Start() methods allocates objects (‘GC Alloc’), but it is total failure when each Update() allocates any object of any size – of course except the transitions from state to state. (I mean in most cases Update() should strongly not be designed such ways that it makes GC alloc every frame, like Update() { scoreText = playerScore.ToString(); })

Now let’s get back to that FastList. It’s a really rapid solution that was made to avoid multiple GC allocations on each frame where foreach is used over number of lists. It is built over a standart list and it inherits all it’s problems you mentioned in this and previous articles. But as for me, until your scripts is not a real bottleneck (let’s say, all your Updates takes 1ms, but rendering takes 10ms), it is okay to use that kind of FastList in a purposes to keep some objects that is being ‘touched’ each frame using foreach.

Anyway, thanks for your article. It actually tells me again that naming of entities is very important in programming =)

#5 by jackson on April 23rd, 2016 ·

Hey Yuri!

Thanks for adding these points. It’s definitely important to mention that

FastListisn’t thread-safe and that’s not something I mentioned in the article.As for “GC Alloc”, you’re right that the GC only needs to collect when you’ve released all references to an object that was allocated. For example, this will have 200 KB of “GC Alloc” even though the string isn’t ever garbage-collected:

The point is more that the allocation has occurred and eventually the garbage will need to be collected. For temporary operations such as a

foreachloop, “eventually” is “right after the loop”.FastListhas the advantage overListhere because it doesn’t release the enumerator right after the list for garbage collection. We can see this in the second test run (i.e.TestScript.SecondTest) whereFastListhas no “GC Alloc” butListdoes.Thanks again for posting such a thorough comment! Let me know if you have any followups to

FastListor other experiments that I can check out.