Compression Speed

Flash makes it very easy to compress data: just call ByteArray.compress. It’s just as easy to uncompress with ByteArray.uncompress. With such convenience, it’s tempting to compress every ByteArray you send across the network without a second thought. But is this really a good idea? Will compressing every packet you send over a socket slow your app to a standstill? Today’s test is designed to answer just this question. Read on for the test and results!

The following test is a little different from the normal, straightforward tests you usually see in my articles. The trouble is that setting up a ByteArray to compress or uncompress is quite expensive. To keep the results clean, the test first sets up a randomized ByteArray and measures just the time taken to copy those bytes to a fresh ByteArray. Each test then copies the original ByteArray and either compresses or uncompresses the copy, and the baseline copy time is subtracted from the result so that only the compression work remains. That said, here’s the test app for the deflate and zlib algorithms with 1 KB and 1 MB sizes:

    package
    {
        import flash.display.*;
        import flash.utils.*;
        import flash.text.*;

        public class CompressionSpeed extends Sprite
        {
            private var logger:TextField = new TextField();
            private function row(...cols): void
            {
                logger.appendText(cols.join(",") + "\n");
            }

            public function CompressionSpeed()
            {
                logger.autoSize = TextFieldAutoSize.LEFT;
                addChild(logger);

                row(
                    "Size",
                    "deflate (compress)",
                    "zlib (compress)",
                    "deflate (uncompress)",
                    "zlib (uncompress)"
                );
                runTests("1 KB", 1024, 1024);
                runTests("1 MB", 1024*1024, 1);
            }

            private function runTests(label:String, size:int, reps:int): void
            {
                var beforeTime:int;
                var afterTime:int;
                var emptyTime:int;
                var deflateTimeCompress:int;
                var zlibTimeCompress:int;
                var deflateTimeUncompress:int;
                var zlibTimeUncompress:int;
                var bytes:ByteArray = new ByteArray();
                var originalBytes:ByteArray = new ByteArray();
                var compressedBytes:ByteArray = new ByteArray();
                var i:int;
                var zlib:String = CompressionAlgorithm.ZLIB;
                var deflate:String = CompressionAlgorithm.DEFLATE;

                fillBytes(originalBytes, size);

                // Empty
                beforeTime = getTimer();
                for (i = 0; i < reps; ++i)
                {
                    copyBytes(originalBytes, bytes);
                }
                afterTime = getTimer();
                emptyTime = afterTime - beforeTime;

                // Compress
                beforeTime = getTimer();
                for (i = 0; i < reps; ++i)
                {
                    copyBytes(originalBytes, bytes);
                    bytes.compress(deflate);
                }
                afterTime = getTimer();
                deflateTimeCompress = afterTime - beforeTime - emptyTime;

                beforeTime = getTimer();
                for (i = 0; i < reps; ++i)
                {
                    copyBytes(originalBytes, bytes);
                    bytes.compress(zlib);
                }
                afterTime = getTimer();
                zlibTimeCompress = afterTime - beforeTime - emptyTime;

                // Uncompress
                copyBytes(originalBytes, compressedBytes);
                compressedBytes.compress(deflate);
                beforeTime = getTimer();
                for (i = 0; i < reps; ++i)
                {
                    copyBytes(compressedBytes, bytes);
                    bytes.uncompress(deflate);
                }
                afterTime = getTimer();
                deflateTimeUncompress = afterTime - beforeTime - emptyTime;

                copyBytes(originalBytes, compressedBytes);
                compressedBytes.compress(zlib);
                beforeTime = getTimer();
                for (i = 0; i < reps; ++i)
                {
                    copyBytes(compressedBytes, bytes);
                    bytes.uncompress(zlib);
                }
                afterTime = getTimer();
                zlibTimeUncompress = afterTime - beforeTime - emptyTime;

                row(
                    label,
                    deflateTimeCompress,
                    zlibTimeCompress,
                    deflateTimeUncompress,
                    zlibTimeUncompress
                );
            }

            private function fillBytes(bytes:ByteArray, size:int): void
            {
                bytes.length = 0;
                bytes.position = 0;
                for (var i:int; i < size; ++i)
                {
                    bytes.writeByte(Math.random()*256);
                }
                bytes.position = 0;
            }

            private function copyBytes(bytes:ByteArray, into:ByteArray): void
            {
                bytes.position = 0;
                into.position = 0;
                into.length = 0;
                into.writeBytes(bytes);
                bytes.position = 0;
                into.position = 0;
            }
        }
    }

I ran this test in the following environment:

- Flex SDK (MXMLC) 4.5.1.21328, compiling in release mode (no debugging or verbose stack traces)

- Release version of Flash Player 11.1.102.55

- 2.4 GHz Intel Core i5

- Mac OS X 10.7.3

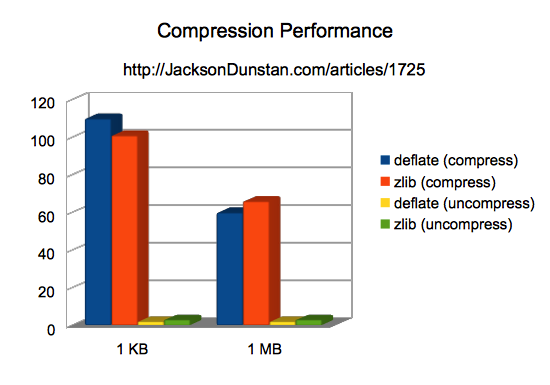

Here are the results I got (times in milliseconds, as reported by getTimer):

| Size | deflate (compress) | zlib (compress) | deflate (uncompress) | zlib (uncompress) |
|---|---|---|---|---|
| 1 KB | 110 | 101 | 2 | 3 |
| 1 MB | 60 | 66 | 2 | 3 |

From this data we can derive a few conclusions:

- compress is much slower than uncompress
- deflate and zlib are about as fast as each other
- The overhead of compressing small amounts of data is large: it’s about twice as slow as with larger amounts (the uncompress times are too small to show a difference either way)

The original question was whether your app would grind to a halt if you compressed and uncompressed every ByteArray you came across. The above test covers a total of 1 MB of data: either 1024 compress/uncompress operations on a 1 KB ByteArray or 1 operation on a 1 MB block. Presumably, if you’re dealing with lots of ByteArray objects, they’re probably small. On the test machine for this article, 1024 compressions of that 1 KB ByteArray took about 100 milliseconds, or roughly 0.1 milliseconds each, so it could be compressed about 10,000 times per second. The 1024 uncompressions took only 2 to 3 milliseconds, which works out to several hundred thousand per second. Even in a real-time game running at 60 FPS, the same ByteArray could be compressed over 160 times per frame or uncompressed several thousand times per frame. At an average of about one compressed network packet per frame, the compression overhead would only take up about 0.6% of the total frame time on the CPU. So will compressing everything you come across slow you down? Probably not!
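The per-second and per-frame estimates can be recomputed directly from the results table. The following is a minimal sketch of that arithmetic, not part of the test app; the class name FrameBudget and its variable names are made up for illustration, and the inputs are the 1 KB deflate (compress) figures from the table.

```actionscript
package
{
    import flash.display.Sprite;

    // Hypothetical helper class for illustration only; not part of the test app.
    public class FrameBudget extends Sprite
    {
        public function FrameBudget()
        {
            // Inputs taken from the 1 KB row of the results table
            var compressMs:Number = 110; // deflate (compress), total for all reps
            var reps:int = 1024;         // number of 1 KB compress calls timed
            var fps:Number = 60;         // assumed target frame rate

            var msPerOp:Number = compressMs / reps;   // time for one compress call
            var opsPerSecond:Number = 1000 / msPerOp; // calls that fit in one second
            var msPerFrame:Number = 1000 / fps;       // time budget of a single frame

            trace("ms per compress:       " + msPerOp);
            trace("compresses per second: " + Math.floor(opsPerSecond));
            trace("compresses per frame:  " + Math.floor(msPerFrame / msPerOp));
            trace("% of frame for one:    " + (100 * msPerOp / msPerFrame));
        }
    }
}
```

Swapping in the uncompress figures (2 or 3 milliseconds) gives the corresponding uncompress estimates, though at millisecond timer resolution those are only rough.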

Spot a bug? Have a question or suggestion? Post a comment!

#1 by Henke37 on February 13th, 2012 ·

Of course the algorithms show similar behavior. IIRC, they are using the same compression code; it's just that one of them deals with a few more headers.

Now here is a fun experiment: test out the speed of the audio decoders. ADPCM does horrible.

#2 by jackson on February 13th, 2012 ·

Good point, and something I should have mentioned in the article. I figured I’d test both anyhow just to see if one was noticeably faster than the other due to those headers. Stranger things have happened in Flash. :)

I’ll make a note of the audio decoders. I don’t think I have a single audio article, so that’d be a good addition. Thanks for the tip.

#3 by AlexG on February 14th, 2012 ·

How about testing different ways of rendering multiple bitmapData objects? For example, using different chunk sizes or the Stage3D API to find the fastest way to render a bitmapData, or a large bitmapData, using the copyPixels() method and so on.

#4 by jackson on February 14th, 2012 ·

On one hand, these kinds of tests could be incredibly useful. On the other, they’re incredibly device-specific. Perhaps some day I’ll set up a big comprehensive test and try it out on a bunch of devices (e.g. desktop/hardware-rendering, desktop/software-rendering, iPad, iPod Touch, various Androids) with a bunch of strategies (e.g. copyPixels, Stage3D software, Stage3D hardware).

#5 by ANJO on April 8th, 2012 ·

can anyone Help me

Im losing hope for fixing this,

First, I convert bitmapData to ByteArray. and save it to my local folder using Shared Object.

    private function bitmapDataToByteArray(bmd:*):ByteArray
    {
        var byteArray:ByteArray;
        byteArray = bmd.getPixels(bmd.rect);
        byteArray.writeShort(bmd.width);
        byteArray.writeShort(bmd.height);
        byteArray.writeBoolean(bmd.transparent);
        byteArray.compress();
        return byteArray;
    }

Then I load the ByteArray from the SharedObject and convert it back to BitmapData using this:

    private function byteArrayToBitmapData(bytes:ByteArray):BitmapData
    {
        if (bytes == null)
        {
            throw new Error("bytes parameter can not be empty!");
        }
        bytes.uncompress();
        if (bytes.length < 6)
        {
            throw new Error("bytes parameter is a invalid value");
        }
        bytes.position = bytes.length - 1;
        var transparent:Boolean = bytes.readBoolean();
        bytes.position = bytes.length - 3;
        var height:int = bytes.readShort();
        bytes.position = bytes.length - 5;
        var width:int = bytes.readShort();
        bytes.position = 0;
        var datas:ByteArray = new ByteArray();
        bytes.readBytes(datas, 0, bytes.length - 5);
        var bmp:BitmapData = new BitmapData(width,height,transparent,0);
        bmp.setPixels(new Rectangle(0,0,width,height),datas);
        return bmp;
    }

BUT when I close my Flash (SWF) and look at the process list, IT'S STILL RUNNING as a process.

Im losing hope on these can anyone help me.. thanks

#6 by jackson on April 8th, 2012 ·

That sounds like a Flash Player bug. Have you filed a bug with Adobe?

#7 by Gary Paluk on October 26th, 2012 ·

It might be worth detailing the final compressed size of these files. The amount of compression achieved could be a deciding factor in a developer's decision to compress their data in the first place, or swing them from the zlib to the deflate algorithm. Obviously different file structures create different results, but perhaps testing a range and then averaging the results would provide a more rounded viewpoint on a nice article.

#8 by jackson on October 26th, 2012 ·

That should definitely be a consideration, if not the most important consideration in compression choice. I think it’s a bit outside the scope of this article to detail the range of compression ratios you’ll get from the various algorithms, but luckily this work has been done by others.

#9 by Gary Paluk on October 26th, 2012 ·

In fact I’ve done some primitive tests myself, and even though I’d say test this out yourself for your particular filetypes etc., here are my results.

I’m catching user events and storing them alongside a timestamp and event type. I’m writing the timestamp as a short (time is broken into fixed chunks over a small period, so a short is enough for me here) and an action which is stored as a char. I have a simple header containing a small UTF-8 string, version control and some copyright information; this header is 0.08 kB before compression. For 1.00 kB of uncompressed data, my compression results are averaging around:

ZLIB: 0.75kB

DEFLATE: 0.71kB

Meaning that the ‘Deflate’ algorithm is working out 4% better with this real-world data. I hope that this is helpful to someone. :)
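For anyone who wants to repeat this kind of size comparison on their own data, here is a minimal sketch. The class name CompressionSize, the helper compressedSize, and the sample payload are all made up for illustration; only ByteArray.compress and the CompressionAlgorithm constants come from the article's test app. Since the zlib format wraps a deflate stream in a small header and checksum, deflate would be expected to come out slightly smaller, but the actual numbers depend entirely on the data.

```actionscript
package
{
    import flash.display.Sprite;
    import flash.utils.ByteArray;
    import flash.utils.CompressionAlgorithm;

    // Hypothetical example class; the name and the sample data are illustrative only.
    public class CompressionSize extends Sprite
    {
        public function CompressionSize()
        {
            // Placeholder payload: repetitive text standing in for real data.
            // Replace this with a capture of the data you actually send or store.
            var original:ByteArray = new ByteArray();
            for (var i:int = 0; i < 64; ++i)
            {
                original.writeUTFBytes("timestamp,action;");
            }

            var zlibSize:uint = compressedSize(original, CompressionAlgorithm.ZLIB);
            var deflateSize:uint = compressedSize(original, CompressionAlgorithm.DEFLATE);

            trace("original: " + original.length + " bytes");
            trace("zlib:     " + zlibSize + " bytes");
            trace("deflate:  " + deflateSize + " bytes");
        }

        // Compresses a copy so the caller's ByteArray is left untouched.
        private function compressedSize(source:ByteArray, algorithm:String):uint
        {
            var copy:ByteArray = new ByteArray();
            copy.writeBytes(source); // default offset and length copy the whole source
            copy.compress(algorithm);
            return copy.length;
        }
    }
}
```

Running it over several representative payloads and averaging, as suggested above, gives a fairer picture than any single sample.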