Introduction to AGAL: Part 1

Flash 11’s new Stage3D class introduces a whole new kind of bytecode to Flash: AGAL. Today I’m beginning a series of articles to talk about what AGAL is in the first place, how you can generate its bytecode and, more generally, how these wacky shaders work. Read on for the first article in the series and learn the basics of AGAL.

First things first: we need to clear up a bit of terminology. The term AGAL is used to mean two related things. First, it is the name of the Stage3D shader bytecode format. In this sense, it’s like the AVM2 bytecode that we’re used to generating when we compile our AS3, C++ (with Alchemy), or HaXe. Second, it is the name of an assembly language that is assembled into AGAL bytecode. I will refer to these as “AGAL bytecode” and “AGAL assembly” to keep the two clear.

It’s worth noting that AGAL assembly is not the only way to generate AGAL bytecode. Currently, these are the ways to generate it (other than a hex editor):

| Language | Language Type | Compiler/Assembler | API |
|---|---|---|---|
| AGAL | Assembly | Adobe’s AGALMiniAssembler AS3 class | None (directly use Stage3D) |
| Pixel Bender 3D | High-Level | Adobe’s beta compiler | Pixel Bender 3D API |
| HxSL | High-Level | HaXe | HaXe Language |

Of these, AGAL assembly is unique in that it provides you with the lowest level code so you can squeeze out the most performance and pack in the most features of any of the language options. It also doesn’t require any bulky APIs or switching to HaXe, which is an appealing bonus. On the downside, you have to write all of your shaders in an assembly language and therefore end up typing a lot more code that is harder to read. However, shaders are virtually always performance-critical code, so this isn’t an area where you want to skimp on optimization. You also won’t be writing very much shader code compared to your app or game, so it’s not as important that you be able to bang out tens of thousands of lines at breakneck speed. As such, I will only be covering AGAL as it is currently my choice of shader language.

It’s important to understand some basics about how Stage3D shaders work before you actually start writing them. To begin with, they are split into two parts. The first part is called a “vertex shader” and it is responsible for specifying each vertex’s position. The second part is called a “fragment shader” and it is responsible for specifying the color of each fragment, which is roughly one pixel. These two parts combine to form a “shader program” that is uploaded and used via the Stage3D API:

    // Create a shader program. It is initially unusable.
    var shaderProgram:Program3D = myContext3D.createProgram();

    // Upload vertex shader and fragment shader AGAL bytecode. It is now usable.
    shaderProgram.upload(vertexShaderAGAL, fragmentShaderAGAL);

    // Use the shader program for subsequent draw operations
    myContext3D.setProgram(shaderProgram);

    // Draw some triangles with the shader program
    myContext3D.drawTriangles(someTriangleIndexBuffer);
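The `vertexShaderAGAL` and `fragmentShaderAGAL` byte arrays passed to `upload()` have to come from somewhere. Here is a minimal sketch of producing them with Adobe’s AGALMiniAssembler class, assuming it is on your source path and that this runs inside a method or frame script. The two tiny shaders and their register layout (position in `va0`, color in `va1`, a matrix starting at `vc0`) are illustrative assumptions, not something the rest of this article depends on:

```actionscript
import com.adobe.utils.AGALMiniAssembler;
import flash.display3D.Context3DProgramType;
import flash.utils.ByteArray;

// AGAL assembly source: one instruction per line.
// Assumed layout (illustrative only): va0 = position, va1 = color,
// vc0..vc3 = a 4x4 transformation matrix.
var vertexSource:String =
    "m44 op, va0, vc0\n" + // transform the position and write it to the output position
    "mov v0, va1";         // hand the color to the fragment shader as a varying value

var fragmentSource:String =
    "mov oc, v0";          // output the interpolated color unchanged

// Assemble each source string into AGAL bytecode.
var vertexAssembler:AGALMiniAssembler = new AGALMiniAssembler();
var vertexShaderAGAL:ByteArray = vertexAssembler.assemble(
    Context3DProgramType.VERTEX, vertexSource);

var fragmentAssembler:AGALMiniAssembler = new AGALMiniAssembler();
var fragmentShaderAGAL:ByteArray = fragmentAssembler.assemble(
    Context3DProgramType.FRAGMENT, fragmentSource);
```

The resulting byte arrays are what `shaderProgram.upload()` expects. The assembly syntax itself is the subject of the next article, so treat the source strings here as placeholders.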

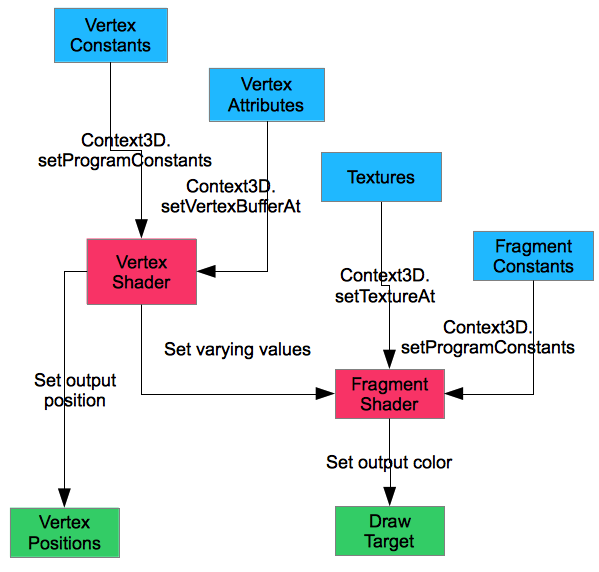

To be able to intelligently set vertex positions and fragment colors, we need to be able to pass data to the shader program. This typically includes camera transformation matrices, textures, colors, normal vectors, and so forth. Here is a diagram describing how this data flows from your AS3 program to the vertex shader to the fragment shader and ultimately to the target of your drawing: the screen or a texture.

Some of these types require a little explanation:

| Data Type | Bound To | Example Uses |
|---|---|---|
| Vertex Constants | Vertex Shader | Transformation matrices, bones (for skeletal animation) |
| Vertex Attributes | Vertices | Positions, normals, colors |
| Varying Values | Vertices | Texture coordinates, colors |
| Textures | Fragment Shaders | Art-driven colors, normal maps |
| Fragment Constants | Fragment Shaders | Fog colors, object-global alpha values |
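Every row of the table except the varying values is set from AS3 through a `Context3D` call. Varying values have no AS3 counterpart because the vertex shader writes them itself. The sketch below shows roughly what those calls look like. The names `modelViewProjection`, `someVertexBuffer`, and `someTexture` are hypothetical, and so is the buffer layout (three position floats followed by three color floats per vertex):

```actionscript
import flash.display3D.Context3DProgramType;
import flash.display3D.Context3DVertexBufferFormat;

// Vertex constants: a 4x4 matrix fills four registers starting at vc0.
// modelViewProjection is a hypothetical flash.geom.Matrix3D.
myContext3D.setProgramConstantsFromMatrix(
    Context3DProgramType.VERTEX, 0, modelViewProjection, true);

// Vertex attributes: va0 = position (3 floats at offset 0),
// va1 = color (3 floats at offset 3). The layout is assumed for this example.
myContext3D.setVertexBufferAt(
    0, someVertexBuffer, 0, Context3DVertexBufferFormat.FLOAT_3);
myContext3D.setVertexBufferAt(
    1, someVertexBuffer, 3, Context3DVertexBufferFormat.FLOAT_3);

// Textures: bind a hypothetical texture to fragment sampler fs0.
myContext3D.setTextureAt(0, someTexture);

// Fragment constants: fc0 = an RGBA fog color.
myContext3D.setProgramConstantsFromVector(
    Context3DProgramType.FRAGMENT, 0,
    Vector.<Number>([0.5, 0.5, 0.5, 1.0]));
```

Treat this as a template rather than something to paste in whole. As far as I know, Stage3D is strict about bound inputs matching what the current shader program actually reads, so bind only what your shaders use.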

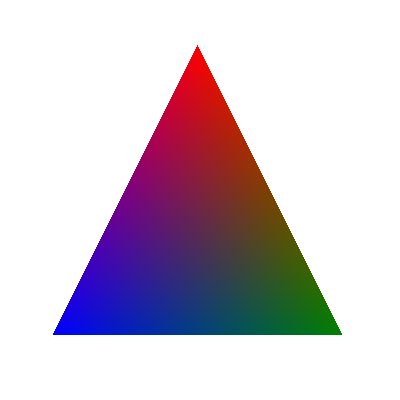

The trickiest of these types are the “varying values”. These values are computed by the vertex shader on a per-vertex basis. When the triangle is drawn, the varying values are interpolated (linearly and with perspective correction) across the surface of the triangle. For example, if your vertex shader outputs red for one vertex of the triangle, green for another, and blue for the third, and the fragment shader simply outputs the varying value, you would get a triangle like this:

As you’ll see in future articles, this is quite useful for efficiently performing many 3D drawing operations. For example, a simple textured triangle could look up the texture coordinate at every fragment of the triangle, but this would be extremely wasteful as such a lookup is quite expensive. It’s much better to look up the texture coordinate at each of the triangle’s vertices and simply interpolate using a varying value.
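To make the interpolation concrete, here is a rough CPU-side sketch of what the GPU does for you with a varying value. The function `interpolateVarying` is hypothetical and exists only for illustration. It assumes you already have barycentric weights for the fragment (three numbers that sum to 1 and say how close the fragment is to each vertex) and it ignores perspective correction, which the hardware handles by adjusting those weights:

```actionscript
// Blend three per-vertex values using barycentric weights.
// w0 + w1 + w2 is assumed to equal 1.
function interpolateVarying(
    a:Vector.<Number>, b:Vector.<Number>, c:Vector.<Number>,
    w0:Number, w1:Number, w2:Number):Vector.<Number>
{
    var result:Vector.<Number> = new Vector.<Number>(a.length, true);
    for (var i:int = 0; i < a.length; i++)
    {
        result[i] = a[i] * w0 + b[i] * w1 + c[i] * w2;
    }
    return result;
}

// The colored triangle: one pure color per vertex.
var red:Vector.<Number> = new <Number>[1, 0, 0];
var green:Vector.<Number> = new <Number>[0, 1, 0];
var blue:Vector.<Number> = new <Number>[0, 0, 1];

// Exactly at the red vertex the result is pure red: (1, 0, 0).
trace(interpolateVarying(red, green, blue, 1, 0, 0));

// Halfway along the red-green edge the result is (0.5, 0.5, 0).
trace(interpolateVarying(red, green, blue, 0.5, 0.5, 0));

// The same blend works for texture coordinates. With (0.5, 0) at the top,
// (0, 1) at the bottom left, and (1, 1) at the bottom right, weights of
// 0.5, 0.25, and 0.25 give (0.5, 0.5).
var top:Vector.<Number> = new <Number>[0.5, 0];
var bottomLeft:Vector.<Number> = new <Number>[0, 1];
var bottomRight:Vector.<Number> = new <Number>[1, 1];
trace(interpolateVarying(top, bottomLeft, bottomRight, 0.5, 0.25, 0.25));
```

You never write this loop yourself in a real shader. The vertex shader writes the varying value, and the fragment shader simply reads the already-blended result.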

That’s all for the first article in the series. Stay tuned for next time when we’ll dig into the AGAL assembly language and start writing some shaders!

#1 by Smily on December 12th, 2011 ·

You noted that “It’s much better to lookup the texture coordinate at each of the triangle’s vertices and simply interpolate using a varying value.”, but according to my tests, it’s not currently possible to read textures from vertex shaders, or is it?

Additionally, have you looked at the AGALMacroAssembler available at https://github.com/flashplatformsdk/Flash-Platform-SDK ? It’s backwards compatible with the mini assembler and provides some useful features (like aliases, simple operations with no temporaries, conditional compilation, etc.) without sacrificing the low-level nature of the code, which is useful for optimization.

#2 by jackson on December 12th, 2011 ·

You can’t (and wouldn’t want to) get the actual pixel color values from the vertex shader, but you can compute the texture coordinates there. For example, the triangle above may have texture coordinates computed for its vertices: (0.5,0)=top, (0,1)=bottom left, (1,1)=bottom right. When these values are allowed to vary over the surface of the triangle, you’d get (0.5,0.5) for the middle pixel and use that to sample the texture’s color value.

As for the AGALMacroAssembler, I hadn’t heard of it but I’ll check it out. Some of those features sound very handy. :)

#3 by Smily on December 12th, 2011 ·

Oh right, I thought you had a secret way to do texture reads, because it’s a bit of a bummer that it limits what you can do by quite a bit, at least more on the GPGPU side (GPU procedural terrain, particle systems, etc.).

On a different note, I’ve also encountered the opcode limits not being as simple as they seem. Some of the more complex operations (at least sin and cos) reduce the amount of opcodes you can use by quite a bit, to the point that you can only have 6-7 sin/cos opcodes and each one reduces the amount of other operations you can do. With no complex operations, you can e.g. do the full 200 mov opcodes, but that can be reduced to only about 40. I’m interested in whether you know anything more about it, if it’s a bug or intentional and where the limitations lie.

Thanks :)

#4 by jackson on December 12th, 2011 ·

I haven’t heard of that limit before. In this article I even do 199 `sin`/`cos` instructions without any problem.

#5 by Smily on December 12th, 2011 ·

The plot thickens! Thanks, I’ll take a look at what I did wrong.

#6 by Smily on December 14th, 2011 ·

Actually, I just tried your test in a flash debugger and got the same problem: http://dl.dropbox.com/u/14681/Stage3DNativeShaderFailed.png

I didn’t notice that before, because it was silently failing, but it also explains the changing backgrounds that I got (upload failing means that drawing fails, so the correct color doesn’t get drawn). It might be a problem with my particular config though, but I don’t have anything out of the ordinary really, a GeForce 560 ti with the 285.62 nvidia driver on Win 7. Works fine in CPU mode (if you can call 1fps fine), but fails in GPU mode in the IE debugger and the standalone debugger (as well as silently failing in release plugins and standalone players).

In any case, I just got reminded that I couldn’t possibly be doing anything wrong if the actual native shader compilation is failing, apart from going over an undocumented limit of internal decompiled opcodes or something.

As a side-note, this vertex shader macro assembler code with useless / padded sin opcodes fails for me (it does work with one sin less though):

#7 by AlexG on December 13th, 2011 ·

Nice article. Thanks

Could you make a speed test for visualising bitmaps with copyPixels() and with stage3D methods for BitmapData copying?

#8 by jackson on December 13th, 2011 ·

With engines like Starling getting popular, I’ll definitely be covering something about 2D with `Stage3D` in the future. :)

#9 by jpauclair on December 14th, 2011 ·

It’s nice to see that you are starting blogging on 3D stuff!

I know that you had access to molehill for quite some time now.

I’m sure it’s the beginning of a whole new series of benchmarking ;)